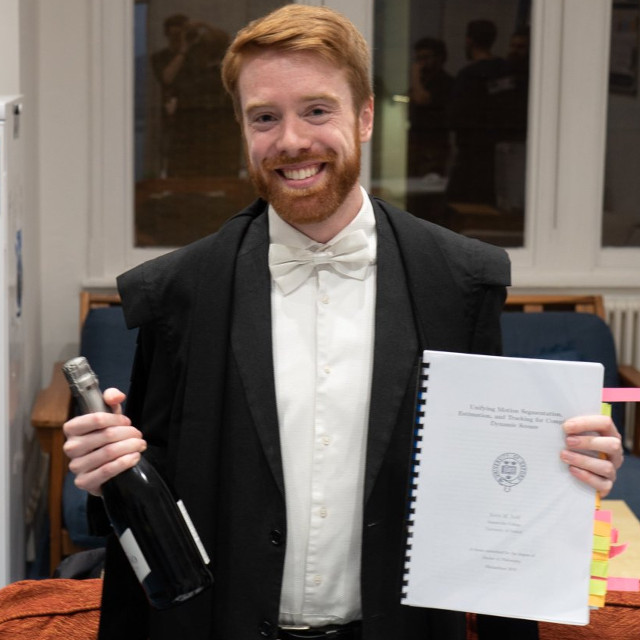

Dr. Kevin Judd

Congratulations to Kevin for completing his D.Phil. on Unifying Motion Segmentation, Estimation, and Tracking for Complex Dynamic Scenes. Many thanks to his examiners Prof. Jonathan Kelly and Prof. Victor Adrian Prisacariu for their time and effort. Well done, Dr. Judd!

Publication

- Type: D.Phil. Thesis
- School: University of Oxford

- Date

Abstract

Visual navigation is a critical task in mobile robotics. To navigate through an area, a robot must understand where it is, what is around it, and how to get to its goal. Integral to each of these questions is the task of motion analysis. Estimating the egomotion of a sensor is a well-studied problem, but only recently have similar questions about the other dynamic objects in the scene been addressed. Understanding the static structure of an environment is crucial to navigating through it, but understanding the motions of other dynamic objects is crucial to doing so safely.

Previous work has developed techniques to estimate the motion of a moving camera in a largely static environment and to segment or track motions in a dynamic scene using known camera motions. It is more challenging to estimate the unknown motions of the camera and the dynamic scene simultaneously, and this thesis focuses on addressing this multimotion estimation problem (MEP).

The estimation of third-party dynamic motions is much more difficult than estimating the sensor egomotion. The static assumption that applies to the background of a scene has no analogue for these dynamic objects, whose observed motions comprise both their arbitrary real-world motions and the camera egomotion.

This thesis introduces Multimotion Visual Odometry (MVO), a novel multimotion estimation pipeline that incorporates motion segmentation and tracking techniques into the traditional visual odometry pipeline in order to estimate the full SE(3) trajectory of every motion in the scene, including the egomotion. MVO segments and estimates all motions simultaneously, treating them all equivalently until the segmentation converges, after which the egomotion can be determined and used to calculate all other motions in a geocentric frame.
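As a rough illustration of that last step, the sketch below shows how an object's apparent motion in the camera frame can be converted to a world-frame motion once the egomotion is known. It is not the MVO implementation: the function names, the frame conventions (`T_wc` maps camera-frame points to the world frame, `A` maps camera-frame points at time k to time k+1), and the use of plain 4x4 homogeneous matrices are all assumptions made for the example.

```python
import numpy as np

def make_transform(yaw, t):
    """Hypothetical helper: 4x4 transform with a rotation of `yaw` radians about z and translation t."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = t
    return T

def invert(T):
    """Closed-form inverse of a rigid-body transform."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv

def world_frame_motion(T_wc_k, A_static, A_obj):
    """Recover the new camera pose and an object's world-frame motion.

    T_wc_k   : camera pose in the world frame at time k (assumed known).
    A_static : apparent motion of the static background in the camera frame.
    A_obj    : apparent motion of a dynamic object in the camera frame.
    """
    # The background only appears to move because the camera moved,
    # so the egomotion is the inverse of the background's apparent motion.
    T_wc_k1 = T_wc_k @ invert(A_static)
    # Express the object's apparent motion in the world frame,
    # which removes the camera's contribution.
    M_world = T_wc_k1 @ A_obj @ invert(T_wc_k)
    return T_wc_k1, M_world
```

Under these conventions a static point yields an identity world-frame motion, which is one way to see why the static background singles out the egomotion among the estimated motions.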

Highly dynamic scenes also tend to exhibit significant occlusions, which make accurate motion estimation and object tracking even more challenging. A physically founded continuous motion prior is introduced to the MVO pipeline to extrapolate temporarily occluded motions and reacquire them when they become unoccluded. This motion closure procedure maintains trajectory consistency and allows the pipeline to estimate and track multiple SE(3) motions, even in the presence of occlusion.
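To give a feel for extrapolating through an occlusion, here is a minimal sketch that assumes a constant body-frame velocity between evenly spaced frames. The abstract does not specify the form of the prior, so the constant-velocity model, the discrete-step formulation, and the function name `extrapolate_pose` are assumptions for illustration only.

```python
import numpy as np

def extrapolate_pose(T_prev, T_last, steps):
    """Predict a pose `steps` frames after T_last, assuming constant velocity.

    T_prev, T_last : the last two observed 4x4 poses before the occlusion.
    steps          : number of frames to extrapolate (non-negative integer).
    """
    # Relative motion over the last observed interval, in the body frame.
    delta = np.linalg.inv(T_prev) @ T_last
    # Repeat that same relative motion once per occluded frame.
    return T_last @ np.linalg.matrix_power(delta, steps)
```

A predicted pose like this could then be compared against newly segmented motions to decide whether a reappearing object matches a previously tracked one, although how that matching is scored is left open here.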

The estimation accuracy of MVO is evaluated quantitatively and qualitatively using real-world data from stereo RGB and event cameras in several highly dynamic multimotion scenes. Much of this data was published in the Oxford Multimotion Dataset (OMD), which was designed to explore the MEP and serve as a scaffold for the development and evaluation of new multimotion estimation techniques.